
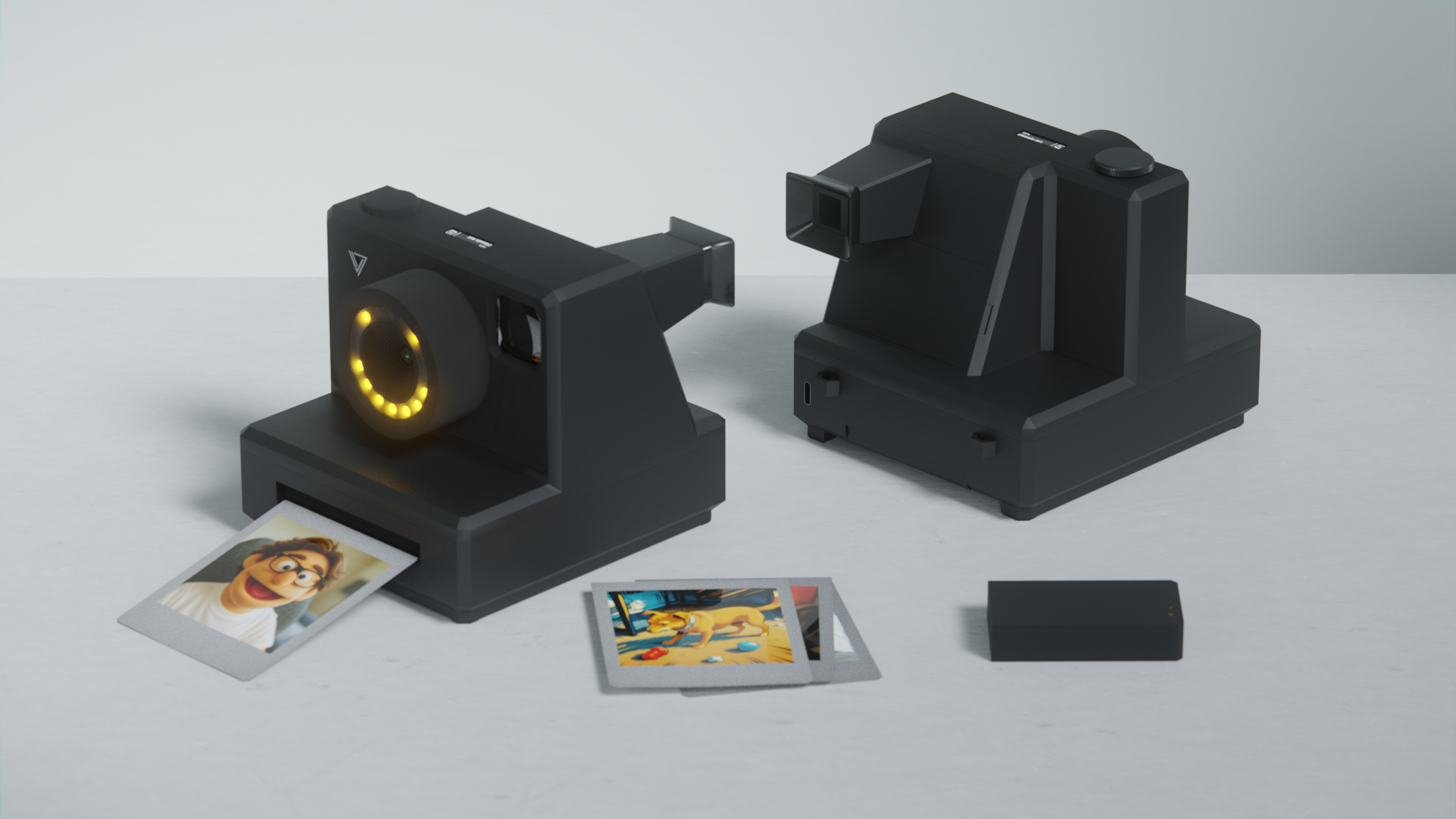

Visionairy Cameras is an experimental line of 3D printed cameras that merge the tactile joy of instant photography with the expressive power of modern AI. It began with Instagen, a retro-inspired instant camera that prints stylized photos, and evolved into Luminaire, a digital-first device for high-quality photos and video.

I set out to explore how AI could transform the act of capturing photos, and built a system that balances nostalgia with modern generative tools while keeping the experience simple in the hand. On the device, capture is fast and legible; off the device, a media processing server handles classification, prompt selection, and modular processing pipelines. I designed Chronos UI, the cameras' operating system, for clarity and speed, along with a gallery that surfaces stylized results with originals a tap away and an LED light language on the hardware for status and feedback. This was my first end-to-end hardware venture, combining product definition, interaction design, CAD and enclosure design, electronics integration, and software systems work to ship two working cameras and a scalable backend that can keep improving without rewriting the device.

Today’s cameras are incredible, yet most images feel weightless. They appear in an endless stream, get a few seconds of attention, and slip into the archive to be forgotten unless highlighted later. Capture has become effortless; meaning has not. We get speed, resolution, and automation, but very little ritual, intention, or memory you can actually hold.

At the same time, a counter-trend is gaining momentum. Film photography is resurging, especially among Gen Z. Disposable cameras are a summer staple, refurbished Polaroids sell at premium prices, and film sales keep ticking upward year over year. Digital natives are reaching for analog tools because they slow you down, make each shot deliberate, and produce something tangible you can pass around.

In parallel, AI image generation has opened a new frontier. Tools like MidJourney, Stable Diffusion, and DALL·E let anyone transform a photo into an imaginative work or generate an image from scratch. For the first time, everyday users can not only capture reality but also reimagine it in seconds.

The gap between these worlds - the intention and tactility of film on one side, the expressive power of AI on the other - is where Visionairy cameras sit. The opportunity is clear: build a camera that blends analog joy with creative AI tools so each photo becomes both a memory and a canvas.

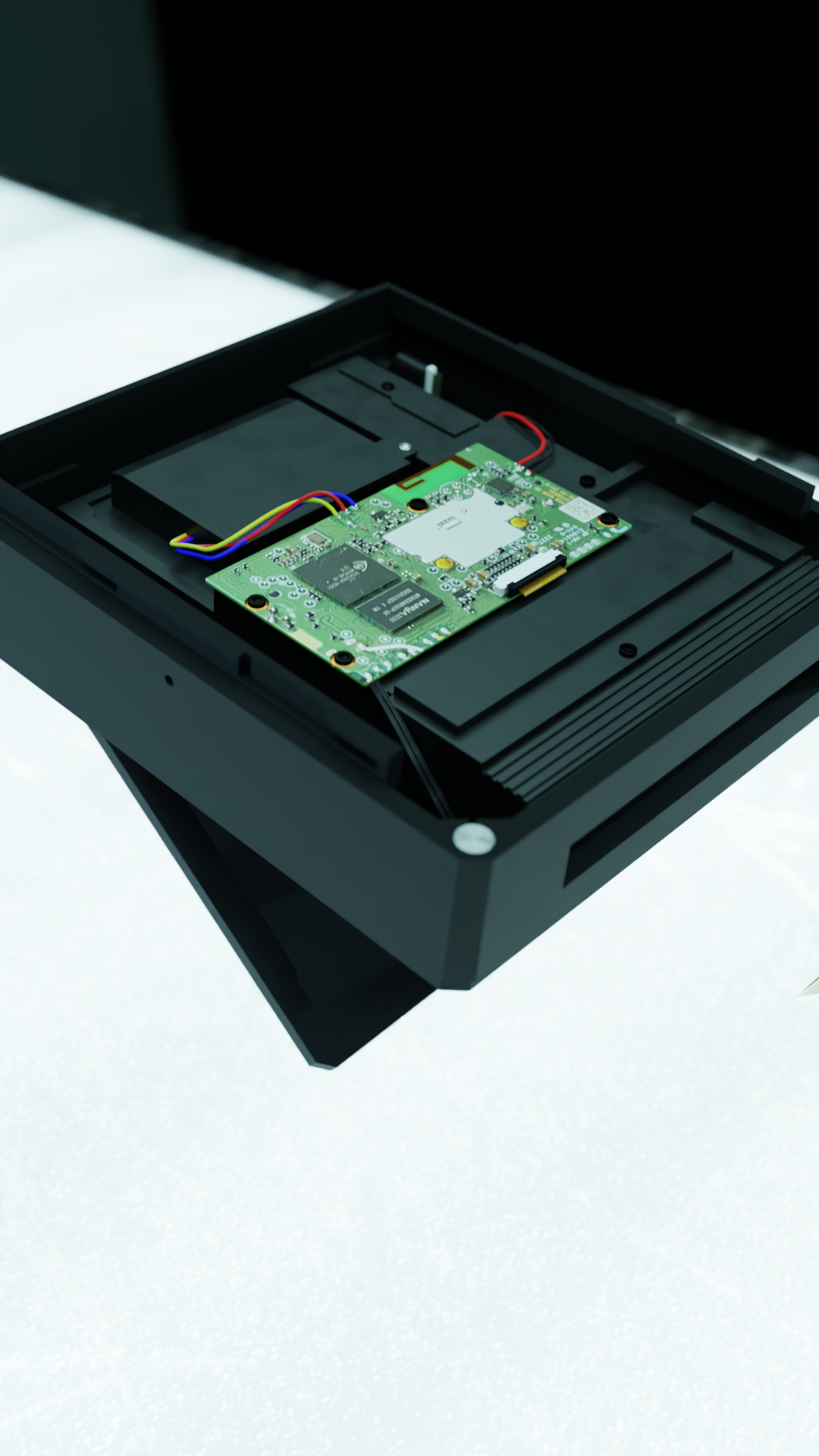

I built the first Visionairy cameras from Raspberry Pis and other off-the-shelf components: the RPi camera module, PiSugar power board, a compact instant printer, LiPo batteries, LEDs, switches, and a rotary encoder. The goal was a standalone camera that could boot, capture, and deliver results without a laptop tether. Starting with modular parts let me swap pieces quickly and learn where the real constraints lived.

At the time, Raspberry Pis were nearly impossible to find. I happened to have one powering a smart mirror I had built, complete with a camera module for facial recognition. I repurposed it as a test bed: capture an image, run it through DALL·E’s image variation endpoint, and output a restyled version. The results weren’t perfect - the API offered no text prompts, only fuzzy dream-like image-to-image variations - but the workflow proved viable. For the first time, I could snap a photo and see it reimagined by AI.

I wanted a handheld body next. I bought a 1970s Polaroid OneStep on eBay, disassembled it, felt really bad about what I had just done to a piece of history, and then tried to fit a Pi, the printer, and the camera module inside. Reality pushed back. The printer loads film from the bottom while the original body expects film from the front. Space was tight, cable routing was unforgiving, and the door mechanics mattered more than any drawing suggested.

Working with Mike Mahn as a creative collaborator made this possible. Mike helped design and model the internal components and taught me the basics of 3D printing so I could iterate quickly and print reliable mounts. After multiple rounds, we kept the vintage faceplate and designed a custom base that finally captured, processed, and printed.

It was not pretty, but it worked, and it taught me what would matter later: reliable power, clear status indicators, fast recovery from errors, and inputs simple enough to use on the fly. It also surfaced the limits of running everything locally, including battery drain, heat, and a clumsy developer workflow that required SSHing into the device to change models or parameters. The lessons from this build led directly to the fully custom body and to moving heavy processing off the camera.

As a side note, I have one of every Polaroid OneStep part, so if you're looking for a replacement, reach out. Seriously.

These scrappy beginnings weren’t just technical milestones; they were design lessons in working within extreme constraints. They set the stage for Instagen and Luminaire, where form and function came together in more refined, deliberate ways.

These early prototypes taught me that I need to keep the device simple and resilient. If a network hiccup or a thermal spike stalls the pipeline or drains the battery, the experience collapses, so the camera has to fail gracefully and recover fast. Feedback should be glanceable and honest. Lights, short labels, and clear states beat dense UI on small screens, because users need to know what is happening without hunting. Inputs should map to the actions people take most, with everything else one step deeper. That is why a single knob can carry style selection and printer control, and why the screen shows only what matters in the moment.

The other lesson was architectural. Heavy work belongs off the device so the camera stays responsive and battery friendly. Moving processing to the server made iteration practical, let pipelines evolve without reflashing hardware, and turned status into something observable end to end. Those principles came directly from the early pain points of testing outside, and they shaped the final cameras as much as any CAD file or UI mockup.

Visionairy cameras aren't meant to compete with professional cameras. The goal was to design for play, creativity, and sharing - experiences that feel fresh and fun while still being intentional. I identified three primary use cases and built the interfaces of Instagen and Luminaire around them.

The first use case is for people who enjoy taking photos but want to see them transformed into something unexpected. A picture of your dog, your room, or your lunch comes back as an anime frame, a cartoon sketch, or a painted landscape. The fun comes from contrast - the image is still recognizable, but it looks like art from another world. Seeing something familiar redrawn as a cartoon or a pencil sketch delivers a new perspective on what you're looking at and can immediately transport you to a stylized parallel universe.

To support this, both cameras make it easy to select and cycle through a library of different styles before capture. The system always feels lightweight: choose a style, take a photo, and see the AI reinterpretation moments later.

Another core use case was photos of friends and group social events. Taking photos of friends at a party or in the park should feel less like a utility and more like an event. That’s why Instagen includes a built-in printer: a photo becomes not just an image on a screen but a physical takeaway, something to laugh over and pass around.

For this, maintaining consistency with human appearances is crucial. You need to be able to recognize your friends and yourself, because if they look like completely different people you may as well be looking at a photo of strangers. This meant developing pipelines with models that maintain temporal consistency and integrate tools like face swapping to maintain subjects' recognizability.

Every capture also needs a home. All media is uploaded to an online gallery tied to each user account. The gallery shows stylized versions first, with originals a tap away. If a capture isn’t restyled, it simply appears as a standard photo or video. This design reinforces the creative focus without hiding the authenticity of the originals.

In Luminaire, the gallery is built directly into the Chronos UI. While a photo or video is being restyled, its cell in the gallery displays a real-time status animation, gradually revealing the image as it’s generated. Final outputs are clearly labeled as “generated,” keeping originals and AI versions easy to distinguish.

Running AI on the camera made it hot, hungry, and hard to iterate. So I moved the heavy lifting off the device. The Media Processing Server keeps the cameras quick and friendly while the backend handles everything computationally expensive and easy to change.

Here is the flow in plain terms: After a capture, the camera bundles a tiny ticket with the user, media path, selected style, and options. It uploads the media and ticket through a lightweight API, then checks in for status while the job runs. On the server, the job is queued and handed to a Classifier Service that labels the shot type and picks the right prompt variant for that class. That decision maps the capture to a pipeline built for the look. Pipelines can add steps when needed, like face preservation for portrait styles or stronger texture work for illustration styles. When processing finishes, the server attaches metadata that explains what happened, sends the result back to the device, and publishes it to the web gallery.
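The device side of that flow can be sketched in a few lines of Python. This is a minimal illustration rather than the actual Chronos code: the `CaptureTicket` fields mirror the ticket described above, while the class names, the `poll_until_complete` helper, and the `fetch_status` callback (which stands in for a call to the server's status API) are all hypothetical.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from enum import Enum
from typing import Callable, List


class JobStatus(str, Enum):
    """The three job states the camera reports to the user."""
    QUEUED = "queued"
    RUNNING = "running"
    COMPLETE = "complete"


@dataclass
class CaptureTicket:
    """Small payload sent with the media: user, media path, style, options."""
    user: str
    media_path: str
    style: str
    options: dict = field(default_factory=dict)

    def to_json(self) -> str:
        # Sorted keys keep the serialized ticket stable and easy to diff.
        return json.dumps(asdict(self), sort_keys=True)


def poll_until_complete(
    fetch_status: Callable[[], str],
    interval_s: float = 0.0,
    max_polls: int = 30,
) -> List[JobStatus]:
    """Check in with the server until the job completes.

    fetch_status is a hypothetical stand-in for a status API call.
    Returns each distinct status in the order it was first observed.
    """
    seen: List[JobStatus] = []
    for _ in range(max_polls):
        status = JobStatus(fetch_status())
        if not seen or seen[-1] is not status:
            seen.append(status)
        if status is JobStatus.COMPLETE:
            return seen
        time.sleep(interval_s)
    raise TimeoutError("job did not complete within max_polls checks")


# Example with a fake server that walks through the job states.
ticket = CaptureTicket(user="demo-user", media_path="captures/0001.jpg", style="watercolor")
fake_server = iter(["queued", "running", "running", "complete"])
history = poll_until_complete(lambda: next(fake_server))
print(ticket.to_json())
print([status.value for status in history])
```

Keeping the ticket this small means the camera only needs to serialize a handful of fields, and bounding the number of polls lets the device give up cleanly instead of hanging when the network drops.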

Why this matters for design is simple. The camera UI stays fast and legible because it is not wrestling with models. I can add or swap pipelines and improve output quality on the server without reflashing hardware. Status is visible end to end, which builds trust while users keep shooting. This architecture is the backbone of Instagen and Luminaire, and it is what makes classifier-driven routing, modular styles, and continuous improvements feel effortless in the hand.

Before any styling, the Classifier Service looks at the capture and gives it a simple label, for example portrait, landscape, or still life, and writes a short description of the scene. Every supported style in Visionairy is a prompt family with variants tailored to each class. The artistic essence stays consistent while phrasing fits the subject.

Example:

Still life: “a watercolor photograph with flowing splotchy patchwork”

Portrait: “a portrait in the style of a watercolor photograph with flowing splotchy patchwork”
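One way to picture a prompt family is as a nested lookup keyed by style and then by class. The sketch below uses the two watercolor variants quoted above; the `PROMPT_FAMILIES` table, the `select_prompt` function, and the fallback to a default class are assumptions made for illustration, not the server's real data model.

```python
# Hypothetical prompt table: one family per style, one variant per class.
PROMPT_FAMILIES = {
    "watercolor": {
        "still_life": "a watercolor photograph with flowing splotchy patchwork",
        "portrait": (
            "a portrait in the style of a watercolor photograph "
            "with flowing splotchy patchwork"
        ),
    },
}


def select_prompt(style: str, shot_class: str, default_class: str = "still_life") -> str:
    """Return the variant for the classified shot type.

    Falling back to a default class when a style has no variant for the
    label is an assumption of this sketch.
    """
    variants = PROMPT_FAMILIES[style]
    return variants.get(shot_class, variants[default_class])


print(select_prompt("watercolor", "portrait"))
print(select_prompt("watercolor", "landscape"))  # no landscape variant, uses the default
```

Adding a new style then amounts to adding one more entry to the table, which matches the idea that the artistic essence lives in the family while the phrasing adapts to the subject.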

Classification also selects a pipeline. Pipelines differ by purpose and can add steps dynamically. A portrait in a detail-forward style runs face-preservation steps to keep the subject recognizable. An illustration-heavy style leans into texture and stylization. Because pipelines are modular, I can introduce new styles by adding class-specific prompts and pointing them to an existing pipeline, or spin up a new pipeline without touching the device UI.
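The modular idea can be sketched as pipelines built from small, composable steps. Everything named here is hypothetical: the step functions only record that they ran instead of calling any model, and the `style_kind` values `"detail"` and `"illustration"` are labels invented for this example.

```python
from typing import Callable, List

# A step takes a job dict and returns an updated copy.
Step = Callable[[dict], dict]


def _record(job: dict, name: str) -> dict:
    """Placeholder for real processing: note which step ran."""
    return {**job, "applied": job["applied"] + [name]}


def stylize(job: dict) -> dict:
    return _record(job, "stylize")


def preserve_face(job: dict) -> dict:
    return _record(job, "preserve_face")


def boost_texture(job: dict) -> dict:
    return _record(job, "boost_texture")


def build_pipeline(shot_class: str, style_kind: str) -> List[Step]:
    """Assemble steps from the classifier label and the kind of style."""
    steps: List[Step] = [stylize]
    if shot_class == "portrait" and style_kind == "detail":
        steps.append(preserve_face)  # keep the subject recognizable
    if style_kind == "illustration":
        steps.append(boost_texture)  # lean into texture and stylization
    return steps


def run_pipeline(job: dict, steps: List[Step]) -> dict:
    for step in steps:
        job = step(job)
    return job


result = run_pipeline({"applied": []}, build_pipeline("portrait", "detail"))
print(result["applied"])
```

Because each step shares the same signature, a new pipeline is just a different list of steps, and nothing on the device needs to change when one is added.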

This routing method means Instagen isn’t just throwing the same filter at every photo; it’s choosing the right model for the right moment, automatically. By classifying first and then picking the pipeline, we’re able to push each image into the environment where it can shine, whether that’s a delicately inked illustration, a glossy fashion edit, or a moody cinematic frame.

Once the media is in the right lane, our processing service takes over - running the job from start to finish, saving the output, and attaching all the metadata that tells the story of how it was made. As soon as a result is ready, the server notifies the camera and real-time status updates flow back down the wire, so the user can always see where things stand: queued, running, or complete. Each camera shows job status differently. The Instagen plays different animations on its LED ring as well as displaying in plain text on the small OLED screen. The Luminaire creates a new slot in the image gallery which displays real-time status updates for processing jobs. Once the processing is done the new photo or video is automatically loaded and displayed.

This flexible structure means the system can generate prompts that feel natural to the subject without asking the user to make extra choices. The user picks a style. Everything else is handled automatically and tuned to deliver the best possible output.

This architecture also makes it easier to develop, test, and push new and different types of processing pipelines, since they are modular and easy to add or swap. While working on the project I developed a "poetry pipeline" that writes a verse about the scene and renders it into the print.

Nowadays the majority of our media are digital. Flat, non-physical artifacts of memories at constant risk of being forgotten or deleted. Pictures meant more when you had limited captures and had to endure an entire post-processing stage to get them. Instagen captures are intentional, fun, and tangible.

Instagen is an Instant Camera reminiscent of popular models from the 1970s. From its bold silhouette to its built-in printer, Instagen is designed to turn heads and print memories.

To illustrate how the camera is assembled and the number of parts involved I created this animation. It shows the old V1 build, but is an accurate reflection of the level of detail involved in constructing the device.

The printer is the pièce de résistance of the build and by far the most challenging part to design for. Nothing good comes easy! Not only did it require a special chassis to mount onto, which was full of weird little details from the manufacturer, it also required us to build a hinged door to load the film cartridges through. This door also contains spring-loaded arms that apply strong pressure to the film cartridge, as well as a locking mechanism that interfaces with a special 'open-close' sensor attached to the printer.

The software to incorporate this printer into the system was developed by a developer in the Netherlands and was implemented by Mike Manh, who I mentioned earlier. Mike developed a beautiful solution to manage the complex Bluetooth handshake that takes place between the Pi and printer on startup, and worked to improve the packet transfer speed between the two devices to reduce print time. Without these two, Instagen would be nothing more than what should have been a mobile app, made more complicated and shoved in a plastic box.

Sitting neatly on top of the camera, the Instagen’s OLED screen keeps you in the loop without getting in the way. It’s your quick-glance guide for choosing styles, checking how many prints you’ve got left, and seeing live status updates while the camera powers on or processes a shot.

Designed to be simple and glanceable, the display adds just enough feedback to make the Instagen feel alive - like a little heartbeat that lets you know your next capture is on the way.

With only a small OLED screen and a single rotary knob, the UI had to be stripped to essentials - printer state, style selection, and photo count. The knob both cycles styles and toggles the printer on/off, ensuring multifunctionality without clutter.
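A single-knob interface like this can be modeled as a tiny state object. The sketch below assumes rotation cycles styles and a press of the encoder's push button toggles the printer; that split, the `KnobState` class, the example style names, and the screen text layout are all illustrative assumptions rather than the Instagen firmware.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class KnobState:
    """State driven by one rotary encoder with a push button."""
    styles: List[str]
    index: int = 0
    printer_on: bool = True

    def rotate(self, clicks: int) -> str:
        """Cycle through styles, wrapping at either end of the list."""
        self.index = (self.index + clicks) % len(self.styles)
        return self.styles[self.index]

    def press(self) -> bool:
        """Toggle the printer and return its new state."""
        self.printer_on = not self.printer_on
        return self.printer_on

    def screen_text(self, prints_left: int) -> str:
        """One glanceable line: style, printer state, photo count."""
        printer = "on" if self.printer_on else "off"
        return f"{self.styles[self.index]} | printer {printer} | {prints_left} left"


knob = KnobState(styles=["watercolor", "anime", "pencil sketch"])
knob.rotate(1)    # one click clockwise
knob.rotate(-2)   # two clicks back, wrapping past the start
knob.press()      # printer off
print(knob.screen_text(prints_left=7))
```

The modulo wrap means the user can keep turning in either direction without hitting a dead end, which suits a control that has no visible position markers.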

The lens is composed of multiple parts which house the camera module, lens glass, and LED ring. Sandwiched and locked together, the entire lens piece locks into the faceplate, also allowing for easily swappable designs.

The LED ring serves as a ring-light flash, pre-flash indicator, processing status indicator, and print status indicator. It's a fun, somewhat mesmerizing way to clearly indicate what the camera is doing and add some visual flair.

Isn’t it the worst when you run out of juice? Never miss a capture with Instagen’s swappable batteries! Each pack is designed to slide in and out with ease, so you can carry spares and keep shooting without waiting on a recharge.

The custom battery modules were built specifically for Instagen, giving the camera a longer lifespan and a more reliable power system. Whether you’re out on an all-day adventure or just forgot to top up before heading out, swapping in a fresh pack takes only seconds. It keeps Instagen feeling like a real camera - always ready to capture the moment, no power anxiety required.

The brains behind the operation is a Raspberry Pi Zero 2 W. This little computer, smaller than a credit card, packs 512MB of SDRAM along with Wi-Fi and Bluetooth. Since all image processing is handled off-device, it's the perfect balance of size and speed.

Powering the Pi is a nifty little power management hat manufactured by PiSugar. This board allows the camera to run on battery power and hosts the on/off power switch along with a sleep/wake button, making it easily portable and super convenient to power the camera on and off.

As I was wrapping up development on Instagen, AI video restyling models were quickly improving, and I wanted to explore how they could fit into the creative pipeline I had built. Instagen had proven the magic of blending old-world hardware with new AI capabilities, but it was purpose-built for instant prints. To push further, I needed a new camera designed for capturing both photos and video... something modern, portable, and versatile. That’s what led me to build Luminaire, the second camera in the Visionairy line-up.

Luminaire was built from the ground up as a digital-first device. It’s small enough to slip into a jacket pocket, with a sleek ergonomic body and a knurled textured grip that makes it feel like a premium tool. Running on my custom Chronos system, it supports HDR capture, manual camera controls, and an onboard media gallery where you can view both originals and AI-generated results. In short, Luminaire represents the next stage of the Visionairy cameras: a portable device that brings the possibilities of AI restyling into everyday photo and video capture.

The 3.5 inch touch screen is about interaction, not ornament. It gives you a live viewfinder with minimal lag, quick access to the most used controls, and clear camera status at a glance. Network state and battery level remain visible so you always know if a capture will upload or if you should conserve power. Critical actions sit within easy reach to keep hands on the camera and eyes on the scene. The result is a control surface that supports fast decisions in the moment, reduces mode switching, and keeps the shooting flow uninterrupted.

Powered by a NeoPixel Stick, the 8-LED array provides both a powerful flash and a status indicator to inform photograph-ees of what's happening camera-side.

There are dedicated animations for:

The Luminaire’s body features a knurled texture that’s printed directly into the shell - a detail that took a bit of iteration to get right. The result is a tactile, ultra-premium feel that elevates the camera beyond a prototype. It’s not just visually striking, it feels great in the hand, giving the device the same satisfying grip you’d expect from high-end industrial design.

I have learned, and continue to learn, so many different things throughout this project, and I branched far out of my comfort zone into different disciplines. From design, to software development, to hardware fabrication - each step opened a new opportunity area to research and grow into. That's why I've been working on this project for so long - it's offered many opportunities to grow and explore.

Embedding AI into products and hardware is now common, but when I started in April of 2023 these concepts were brand new, and identifying core use cases where AI-driven tools could create evergreen experiences was largely unexplored territory. That experience helped me immensely when I transferred from Spark to working on AI wearables at Meta.

Instagen has grown far beyond a single prototype, and the next step is opening it up to others. I’m currently working on making the cameras available as DIY build kits, complete with the necessary files, parts lists, and guides so that anyone can create one themselves.

In parallel, I’m open-sourcing the Chronos and Chronos-Headless apps, making it possible to run the cameras out of the box and giving the community a foundation to build on. The goal is to transform Visionairy cameras from a personal experiment into a platform for collaboration and creativity, where others can extend, remix, and push the work in directions I can’t imagine alone.

A lot of people told me that I should productize these cameras. Truthfully, I don't believe this is a viable product in its current form. If I were to try to manufacture these cameras and sell them, they would be quite expensive and require a subscription model to use. The output is cool, but ultimately gimmicky and does not hold up to longstanding use. It's not solving a real problem, it's a fun toy. But that is in physical form. As a mobile app, this thing has legs. Just look at what happened when OpenAI released their image model.

So yes, I am currently building that :) And at the time of this writing it already works.

Watch this space! Thank you for reading.